3Blue1Brown's Bitcoin Video Briefly Taken Down: When Automated Brand Protection Goes Wrong

By Tison Brokenshire
In a startling episode that highlights the pitfalls of automated copyright enforcement, the popular mathematics YouTuber 3Blue1Brown recently had his 2017 video explaining how Bitcoin works removed from YouTube. The takedown arrived alongside a copyright strike issued on behalf of Arbitrum by a company called ChainPatrol, which specializes in “brand protection” for Web3 companies using AI-based scanning. The good news? The claim turned out to be a false positive. The bad news? It underscored the very real risks that automated systems pose to content creators everywhere.

A Surprise Takedown of an Original Work

3Blue1Brown, real name Grant Sanderson, is well known for his high-quality math explainer videos. His “How Bitcoin Works” video, published in 2017, has garnered millions of views and has long been lauded as a clear, neutral explanation of Bitcoin’s underlying cryptographic and economic concepts. Earlier this week, however, Sanderson learned that YouTube had taken down the video and issued a copyright strike against his channel.

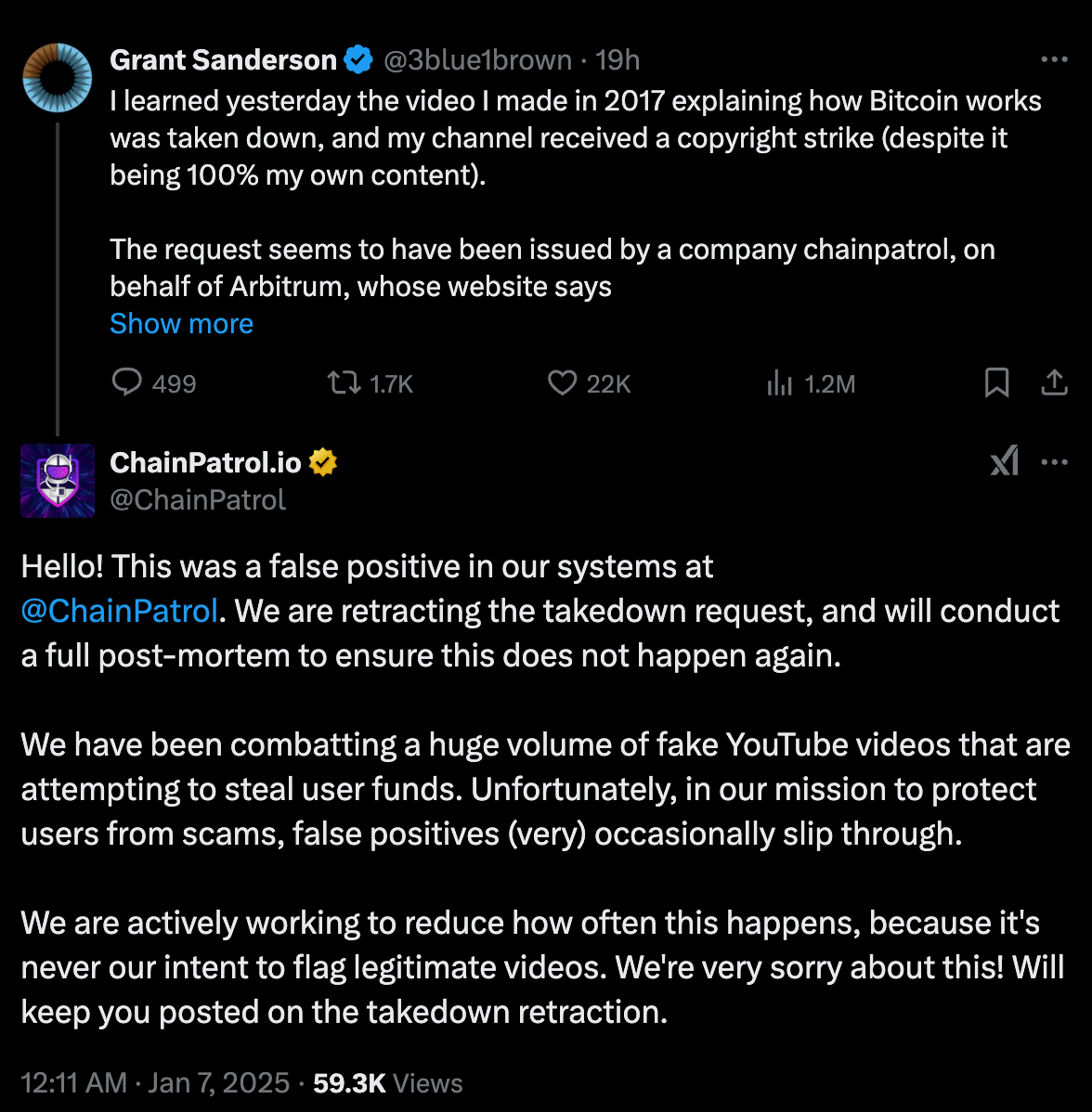

In a Twitter post, 3Blue1Brown detailed how ChainPatrol, acting on behalf of Arbitrum, a project in the Web3 space, claimed that his fully original content was infringing. He speculated that an automated system may have mistaken a re-upload of his video (plenty of scammers and copycats steal popular content) for the “original,” and so flagged the genuine video as the duplicate. Sanderson rightly pointed out the dire consequences of such strikes: “It only takes three to delete an entire channel,” he noted.
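The failure mode Sanderson describes, a matcher treating the older video as the copy, is the kind of thing a simple ordering check could catch. Below is a minimal sketch in Python of such a guard. It is not ChainPatrol's or YouTube's actual pipeline, which is not public; the `Upload` record, the `choose_action` function, the action names, and the dates are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Upload:
    """Hypothetical record for one copy of a video found by a scanner."""
    video_id: str
    channel: str
    published: date


def choose_action(flagged: Upload, claimed_original: Upload) -> str:
    """Decide what to do with a video flagged as a copy of `claimed_original`.

    Returns "file_claim" only when the flagged upload is strictly newer than
    the claimed original; otherwise returns "human_review".
    """
    if flagged.published > claimed_original.published:
        return "file_claim"
    # The flagged video predates (or ties with) the supposed original, so the
    # direction of the match is suspect and a person should look at it before
    # any takedown is filed.
    return "human_review"


# Placeholder dates: the article only says the genuine video is from 2017,
# and the re-upload date is invented for this example.
genuine = Upload("genuine-2017", "3Blue1Brown", date(2017, 1, 1))
reupload = Upload("copy-later", "hypothetical-copycat", date(2024, 1, 1))

print(choose_action(flagged=genuine, claimed_original=reupload))
print(choose_action(flagged=reupload, claimed_original=genuine))
```

A date comparison like this does not establish infringement on its own; it only blocks the specific mistake of filing a claim against the older of two matching uploads without a human looking first.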

ChainPatrol’s Response

ChainPatrol replied publicly on Twitter, calling the removal of 3Blue1Brown’s video a “false positive” and expressing regret over the incident. They emphasized that their mission is to protect users from crypto scams, noting that “in our mission to protect users from scams, false positives (very) occasionally slip through.” They promised to retract the takedown and conduct a thorough review to prevent repeats.

Despite the apology, the incident drew renewed scrutiny to automated brand-protection services that file mass takedown requests. Observers on Hacker News pointed out that these companies wield YouTube’s copyright claim tools not necessarily for “legitimate” copyright protection, but as a quick path to removing suspicious content. In practice, the line between “protecting” a brand and filing overzealous takedowns can blur alarmingly fast.

A Broader Problem With Automated Enforcement

The 3Blue1Brown episode isn’t an isolated problem. According to community reports—and experiences from other creators—it reflects a growing issue with third-party services that submit takedown notices based largely on automated algorithms. These systems scan for brand mentions, copyrighted logos, or suspicious uploads; ironically, high-quality original works can get swept up in the net if the AI misfires.

- Collateral Damage for Smaller Creators: Big channels like 3Blue1Brown at least stand a fighting chance in the court of public opinion. A single Twitter post can rally fans and the media, resulting in a swift correction and apology. Smaller creators or niche communities, however—such as indie Minecraft servers that have faced automated IP/copyright claims—often go unheard. Their appeals might be ignored or tangled in slow-moving processes, risking a total channel shutdown.

- The Minecraft Parallel: Multiple reports indicate that the Minecraft community experienced similar mass-reporting waves, where legitimate gameplay videos were taken down or demonetized because they contained short clips that automated brand-enforcement AI had incorrectly flagged. The frustration, and the damage to the community’s creativity, were significant.

- Reliance on DMCA Tools vs. YouTube’s Internal Copyright System: On Hacker News, some users debated whether ChainPatrol’s takedown notices amount to perjury under the DMCA—since they would have had to swear under penalty of perjury that the content was infringing. Others pointed out that YouTube’s own system can operate independently of the formal DMCA route, making accountability murky.

What This Means for the Future of Content Creation

- Algorithmic Overreach

- Creators at Risk

- Need for Greater Accountability

- Human-in-the-Loop Verification

A Wake-Up Call

As 3Blue1Brown’s experience shows, even high-profile, wholly original content can fall victim to misguided takedown claims. While ChainPatrol has apologized and retracted the complaint, the outrage from fans and fellow creators underscores how automated brand enforcement can easily transform into a blunt instrument.

It also points to a more uncomfortable truth: if someone as prominent as 3Blue1Brown can be temporarily knocked off the platform by mistake, smaller creators risk far more devastating consequences. In the wake of this incident, there’s renewed hope that YouTube, brand-protection firms, and regulators will revisit how these copyright and brand-impersonation protocols are enforced.

Conclusion

The brief removal of 3Blue1Brown’s Bitcoin video is a reminder that the modern internet’s automated enforcement systems, while effective against real scams and brand impersonators, can go dangerously astray. When the takedown process is abused—or simply goes haywire—content creators suffer the most. Moving forward, stakeholders from YouTube to brand-protection companies to content creators themselves must advocate for more transparent processes, stricter penalties for erroneous claims, and better human oversight.

Until then, creators large and small will continue to tread carefully, hoping not to become the next casualty of a flawed—and often opaque—takedown regime.