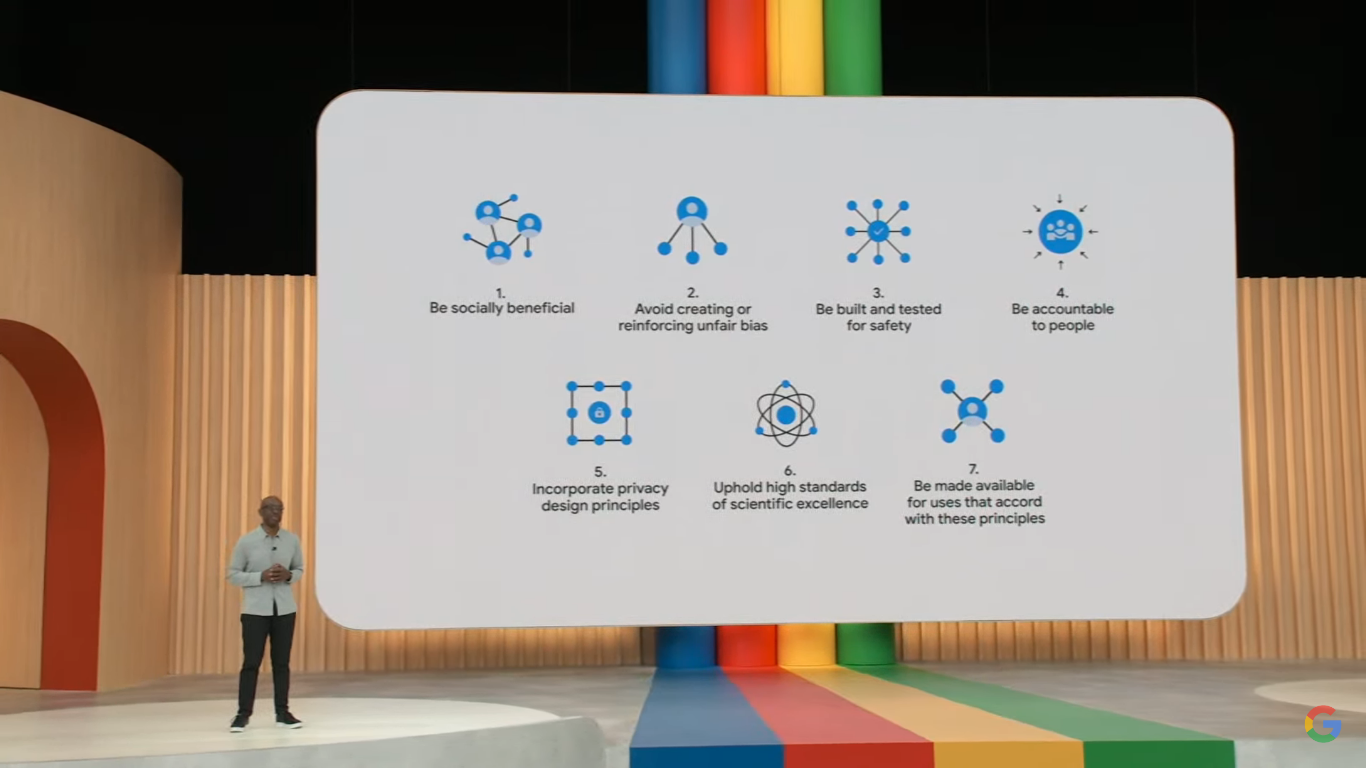

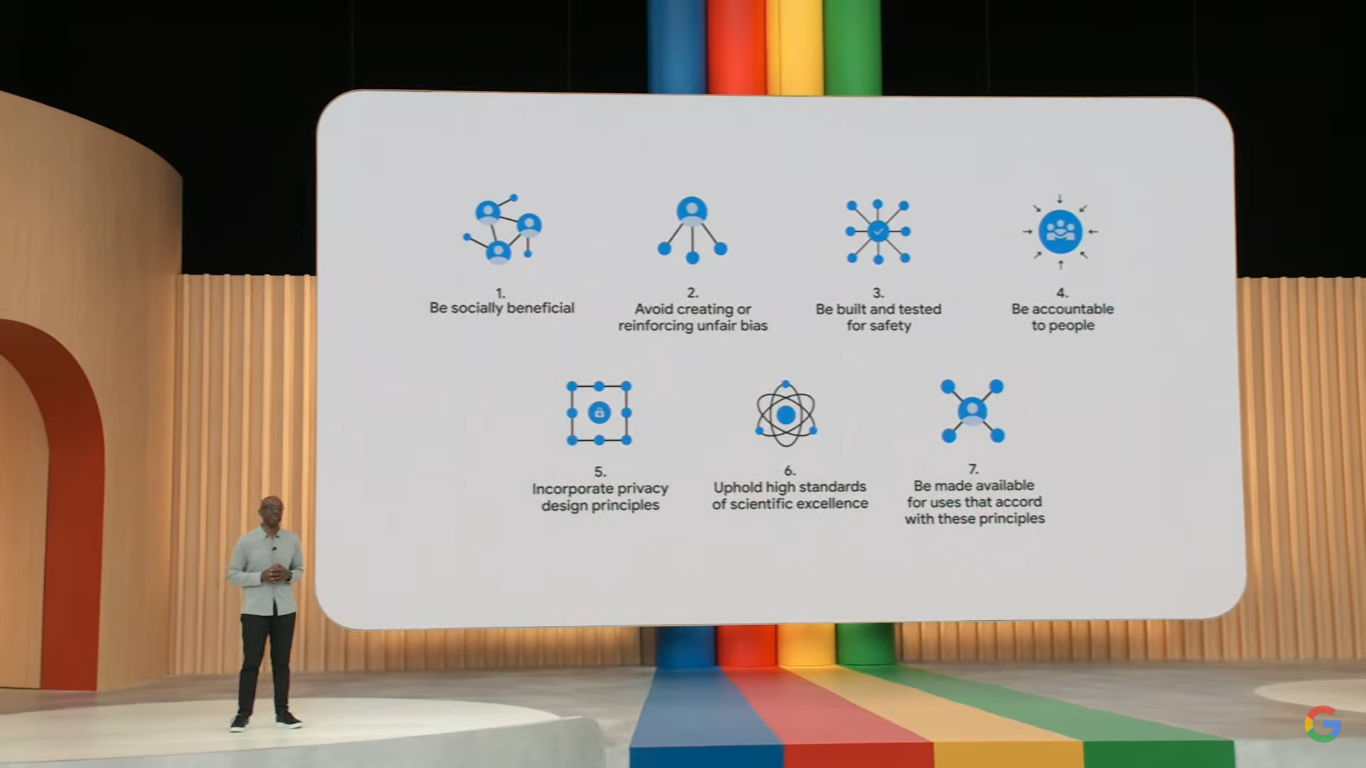

Google's AI Principles: Ethics and Accountability Guidelines

Google AI Principles

1. Be socially beneficial

- Thoughts: AI should prioritize the well-being of individuals and society as a whole. This means considering the potential social impacts of technology.

- Additional Information: Social benefit can be measured through improved quality of life and broader access to information and educational resources.

2. Avoid creating or reinforcing unfair bias

- Thoughts: AI systems must be designed to minimize biases that could adversely affect any group of people.

- Additional Information: This involves diverse data collection and ongoing evaluations to ensure fairness across different demographics.

3. Be built and tested for safety

- Thoughts: Safety in AI covers both the physical and the psychological effects of using the technology.

- Additional Information: Testing should include scenarios to assess potential risks before deployment in real environments.

4. Be accountable to people

- Thoughts: There should be clear lines of accountability for AI decisions, allowing users to understand how decisions are made.

- Additional Information: Transparency in AI decision-making enhances trust and allows for accountability measures to be established.

5. Incorporate privacy design principles

- Thoughts: Protecting user privacy should be a foundational aspect of AI design, preventing unauthorized data access and ensuring user consent.

- Additional Information: This can involve implementing encryption, data anonymization, and giving users control over their personal data.

6. Uphold high standards of scientific excellence

- Thoughts: Research and development of AI must adhere to rigorous scientific principles, ensuring validity and reliability.

- Additional Information: This can include peer review, transparent methodologies, and reproducible research findings.

7. Be made available for uses that accord with these principles

- Thoughts: AI technologies should be accessible only for applications that align with ethical standards and societal values.

- Additional Information: This may require establishing guidelines and review processes for prospective users of AI technologies.
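
The "ongoing evaluations to ensure fairness across different demographics" noted under principle 2 can be made concrete with a simple audit. The following is a minimal sketch, not Google's method: it assumes binary predictions (1 = favourable outcome) and a single group label per record, and it uses demographic parity (equal selection rates across groups) as one possible fairness measure among many. The function names and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of favourable predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rate.

    A gap of 0.0 means every group receives favourable predictions
    at the same rate; larger gaps call for closer review.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit data: model outputs and the group of each record.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates, gap)
```

A large gap does not prove unfair bias on its own, but running a check like this on a schedule gives the regular evaluation the notes call for a measurable starting point.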

AI Principles for Responsible Development

- Be socially beneficial

  - AI should prioritize societal benefits

  - Consider long-term impacts on individuals and communities

  - Align AI development with broader social goals and values

- Avoid creating or reinforcing unfair bias

  - Implement measures to identify and mitigate biases in AI systems

  - Ensure diverse and representative data sets for training

  - Regularly audit AI outputs for potential discriminatory effects

- Be built and tested for safety

  - Implement rigorous safety protocols in AI development

  - Conduct thorough testing for potential risks and vulnerabilities

  - Consider both physical and psychological safety aspects

- Be accountable to people

  - Establish clear lines of responsibility for AI decisions

  - Implement transparency measures to explain AI processes

  - Create mechanisms for user feedback and redress

- Incorporate privacy design principles

  - Prioritize user data protection in AI system design

  - Implement strong encryption and data minimization practices

  - Ensure informed consent for data collection and use

- Uphold high standards of scientific excellence

  - Adhere to rigorous scientific methodologies in AI research

  - Promote peer review and replication of AI studies

  - Encourage interdisciplinary collaboration in AI development

- Be made available for uses that accord with these principles

  - Develop clear guidelines for ethical AI applications

  - Implement review processes for AI use cases

  - Restrict AI deployment to applications aligned with ethical standards

These principles form a comprehensive framework for responsible AI development, addressing key concerns such as social impact, fairness, safety, accountability, privacy, scientific integrity, and ethical use. They provide guidance for AI researchers, developers, and users to ensure that AI technologies benefit society while minimizing potential harm.
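
For the privacy principle, data minimization can be illustrated with a short sketch. It assumes a record is a plain dictionary with a `user_id` field, and the allow-list, field names, and key handling are all hypothetical. The code keeps only the fields a system needs and replaces the direct identifier with a keyed hash. This is pseudonymization, which is weaker than full anonymization: anyone holding the key can recompute the mapping, so the key must be stored and rotated securely.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only attributes the system needs.
ALLOWED_FIELDS = {"age_band", "country"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allow-list and pseudonymize the user ID."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["user_ref"] = pseudonymize(record["user_id"], secret_key)
    return reduced


# Hypothetical input record; the key is a placeholder, not a real secret.
raw = {
    "user_id": "u-1001",
    "email": "person@example.com",
    "age_band": "30-39",
    "country": "DE",
}
clean = minimize_record(raw, secret_key=b"replace-with-a-managed-secret")
print(sorted(clean))
```

The same input and key always yield the same `user_ref`, so records can still be linked for analysis without storing the original identifier or the email address.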
